Product Details


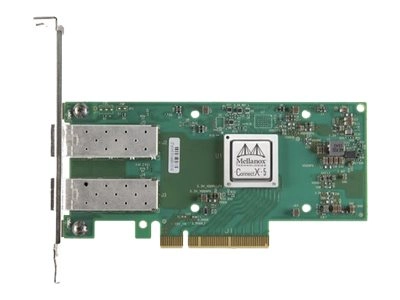

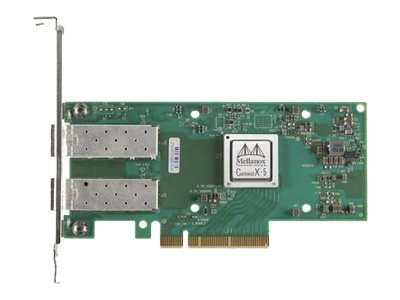

Lenovo ThinkSystem Mellanox ConnectX-5 EN - Network adapter - PCIe 3.0 x8 low profile - 10/25 Gigabit SFP28 x 2 - for ThinkSystem SR630 7X01, 7X02; SR650 7X05, 7X06

- Cloud and Web 2.0 environments

ConnectX-5 adapter cards enable data center administrators to benefit from better server utilization and reduced costs, power usage, and cable complexity, allowing more virtual appliances, virtual machines, and tenants to co-exist on the same hardware. Cloud and Web 2.0 customers developing platforms on Software Defined Network (SDN) environments are leveraging the virtual switching capabilities of server operating systems to achieve maximum flexibility. Open vSwitch (OvS) is an example of a virtual switch that allows virtual machines to communicate with each other and with the outside world. A software-based virtual switch or virtual router traditionally resides in the hypervisor, where switching is based on twelve-tuple matching on flows, which makes it CPU-intensive. This can degrade system performance and prevent full utilization of the available bandwidth. Mellanox ASAP² (Accelerated Switching and Packet Processing) technology offloads the vSwitch/vRouter by handling the data plane in the NIC hardware, without modifying the control plane. The result is significantly higher vSwitch/vRouter performance without the associated CPU load.

- Storage environments

NVMe storage devices are gaining popularity by offering very fast storage access. The evolving NVMe over Fabrics (NVMe-oF) protocol leverages RDMA connectivity for remote access. ConnectX-5 offers further enhancements by providing NVMe-oF target offloads, enabling very efficient NVMe storage access with no CPU intervention, thus improving performance and reducing latency. The embedded PCIe switch enables customers to build standalone storage or Machine Learning appliances. As with earlier generations of ConnectX adapters, standard block and file access protocols leverage RoCE for high-performance storage access. A consolidated compute and storage network achieves significant cost-performance advantages over multi-fabric networks. ConnectX-5 enables an innovative storage rack design, Host Chaining, which lets servers interconnect directly without involving the top-of-rack switch. By leveraging Host Chaining, ConnectX-5 lowers the data center's total cost of ownership by reducing CAPEX (cable, NIC, and switch port expenses). OPEX is also reduced by cutting down on switch port management and overall power usage.
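The switch-port savings claimed for Host Chaining can be illustrated with a simple cable and port count. The sketch below is a hypothetical model, not a Lenovo or Mellanox sizing tool: it assumes every server has one dual-port NIC, that a conventional rack cables both ports to the top-of-rack (ToR) switch, and that a chained rack links neighboring servers port-to-port with only the two end servers uplinked to the ToR switch. The names `Cabling`, `tor_design`, and `chained_design` are illustrative only.

```python
"""Toy cabling model: ToR-attached rack vs. a Host Chaining rack.

Assumptions (illustrative only, not vendor figures):
- every server has one dual-port NIC;
- ToR design: both NIC ports are cabled to the top-of-rack switch;
- chained design: neighboring servers are cabled port-to-port, and only the
  two servers at the ends of the chain use their free port as a ToR uplink.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class Cabling:
    cables: int
    switch_ports: int


def tor_design(servers: int) -> Cabling:
    # Two cables per server, each terminating on its own ToR switch port.
    return Cabling(cables=2 * servers, switch_ports=2 * servers)


def chained_design(servers: int) -> Cabling:
    if servers < 2:
        raise ValueError("a chain needs at least two servers")
    # (servers - 1) server-to-server links, plus one ToR uplink at each end.
    return Cabling(cables=(servers - 1) + 2, switch_ports=2)


if __name__ == "__main__":
    for n in (4, 8, 16):
        tor, chain = tor_design(n), chained_design(n)
        print(f"{n:>2} servers: ToR {tor.cables} cables / {tor.switch_ports} ports, "
              f"chain {chain.cables} cables / {chain.switch_ports} ports")
```

Under these assumptions a 16-server rack drops from 32 ToR ports to 2. What the model leaves out is that traffic between servers far apart in the chain must traverse the intermediate NICs, so a real design weighs port savings against hop count and shared link bandwidth.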

- Telecommunications

Telecommunications service providers are moving towards disaggregation, server virtualization, and orchestration as key tenets to modernize their networks. They are also moving towards Network Function Virtualization (NFV), which enables the rapid deployment of new network services. With this move, proprietary dedicated hardware and software, which tend to be static and difficult to scale, are being replaced with virtual machines running on commercial off-the-shelf (COTS) servers. For telecom service providers, choosing the right networking hardware is critical to achieving a cloud-native NFV solution that is agile, reliable, fast, and efficient. Telco service providers typically leverage virtualization and cloud technologies to achieve agile service delivery and efficient scalability; these technologies require an advanced network infrastructure to support higher packet processing rates. However, the resulting east-west traffic causes numerous interrupts as I/O traverses from kernel to user space, which consumes CPU cycles and decreases packet performance. Voice and video applications, which often require latency below 100 ms, are particularly sensitive to delays. ConnectX-5 adapter cards drive extremely high packet rates, increase throughput, and raise network efficiency through the following technologies: Open vSwitch (OvS) offloads, OvS over DPDK or ASAP², network overlay virtualization, SR-IOV, and RDMA. These higher-performance offloads enable secure data delivery while reducing CPU resource utilization and boosting data center infrastructure efficiency. The result is a much more responsive and agile network capable of rapidly deploying network services.
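To put a number on the "higher packet processing rates" that NFV workloads demand, the sketch below computes the theoretical Ethernet line-rate packet budget for one port in one direction. It uses only standard Ethernet framing overhead (8-byte preamble/SFD plus a 12-byte minimum inter-frame gap per frame); it is illustrative arithmetic, not a measured ConnectX-5 figure, and the function names are hypothetical.

```python
"""Theoretical Ethernet line-rate packet budget (illustrative arithmetic only).

Each frame on the wire is accompanied by an 8-byte preamble/SFD and a 12-byte
minimum inter-frame gap, so a 64-byte minimum frame occupies 84 bytes
(672 bits) of line time.
"""

PREAMBLE_SFD_BYTES = 8
INTER_FRAME_GAP_BYTES = 12


def max_packets_per_second(link_bps: float, frame_bytes: int) -> float:
    """Upper bound on frames/s for one direction of a link at full line rate."""
    wire_bits = (frame_bytes + PREAMBLE_SFD_BYTES + INTER_FRAME_GAP_BYTES) * 8
    return link_bps / wire_bits


def per_packet_budget_ns(link_bps: float, frame_bytes: int) -> float:
    """Time available to handle each frame if line rate is to be sustained."""
    return 1e9 / max_packets_per_second(link_bps, frame_bytes)


if __name__ == "__main__":
    for gbps in (10, 25):
        pps = max_packets_per_second(gbps * 1e9, 64)
        ns = per_packet_budget_ns(gbps * 1e9, 64)
        print(f"{gbps} GbE, 64-byte frames: {pps / 1e6:.2f} Mpps, {ns:.2f} ns per packet")
```

For 64-byte frames this works out to about 14.88 Mpps (67.2 ns per packet) at 10 GbE and about 37.20 Mpps (26.88 ns per packet) at 25 GbE. A budget of a few tens of nanoseconds per packet helps explain why per-packet interrupts and kernel-to-user-space copies become the bottleneck that hardware offloads aim to remove.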

- Hardware-based reliable transport

- Collective operations offloads

- Vector collective operations offloads

- Mellanox PeerDirect RDMA (aka GPUDirect) communication acceleration

- 64/66 encoding

- Extended Reliable Connected transport (XRC)

- Dynamically Connected Transport (DCT)

- Enhanced atomic operations

- Advanced memory mapping support, allowing user mode registration and remapping of memory (UMR)

- On-demand paging (ODP)

- MPI Tag Matching

- Rendezvous protocol offload

- Out-of-order RDMA supporting Adaptive Routing

- Burst buffer offload

- In-Network Memory registration-free RDMA memory access
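MPI Tag Matching offload moves the matching of incoming messages against posted receives from the host CPU into the adapter. As a rough picture of what that work involves, the sketch below is a simplified software model of MPI point-to-point matching: an arriving message matches the earliest posted receive whose source and tag agree (with wildcards), otherwise it waits in an "unexpected" queue that newly posted receives search first. It is not a Mellanox or MPI API; `TagMatcher`, `Receive`, `Message`, `ANY_SOURCE`, and `ANY_TAG` are hypothetical names, and communicators are omitted for brevity.

```python
"""Simplified software model of MPI-style tag matching (illustrative only)."""
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional, Tuple

ANY_SOURCE = -1  # wildcard: match a message from any sender
ANY_TAG = -1     # wildcard: match a message with any tag


@dataclass
class Message:
    source: int
    tag: int
    payload: bytes


@dataclass
class Receive:
    source: int
    tag: int
    request_id: int


def _matches(recv: Receive, msg: Message) -> bool:
    # A receive matches when source and tag agree or are wildcards.
    return recv.source in (ANY_SOURCE, msg.source) and recv.tag in (ANY_TAG, msg.tag)


class TagMatcher:
    def __init__(self) -> None:
        self.posted: List[Receive] = []            # posted receives, in post order
        self.unexpected: Deque[Message] = deque()  # unmatched messages, in arrival order

    def on_message(self, msg: Message) -> Optional[Tuple[int, Message]]:
        """Handle an arriving message; return (request_id, msg) if a receive matched."""
        for i, recv in enumerate(self.posted):
            if _matches(recv, msg):
                del self.posted[i]  # earliest matching receive wins
                return recv.request_id, msg
        self.unexpected.append(msg)
        return None

    def post_receive(self, recv: Receive) -> Optional[Tuple[int, Message]]:
        """Post a receive; complete it at once if an unexpected message matches."""
        for i, msg in enumerate(self.unexpected):
            if _matches(recv, msg):
                del self.unexpected[i]  # oldest matching message wins
                return recv.request_id, msg
        self.posted.append(recv)
        return None


if __name__ == "__main__":
    m = TagMatcher()
    m.post_receive(Receive(source=ANY_SOURCE, tag=7, request_id=1))
    print(m.on_message(Message(source=3, tag=5, payload=b"early")))  # None: queued as unexpected
    print(m.on_message(Message(source=3, tag=7, payload=b"hello")))  # completes request 1
    print(m.post_receive(Receive(source=3, tag=5, request_id=2)))    # served from unexpected queue
```

Both queues are searched linearly and in order, which is what makes matching costly on the CPU when many receives are outstanding; performing that search in the NIC is the motivation for the offload.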